🧠 Neural Networks Explained: A Beginner’s Guide to Machine Learning and AI

🧠 What Are Neural Networks? A Beginner’s Guide to AI’s Brainpower

Introduction

Neural networks are a core part of machine learning and deep learning, inspired by the way the human brain processes information. Just as our brain uses neurons to transmit signals and learn patterns, neural networks utilise artificial neurons (or nodes) connected in layers to perform tasks such as image recognition, language understanding, and more.

🧩 Key Components of Neural Networks

A neural network consists of interconnected nodes arranged in layers. Let’s break it down:

🔘 Neurons (Nodes)

Each node receives one or more inputs, applies a transformation (typically a weighted sum and an activation function), and passes the output to the next layer. These nodes are the fundamental building blocks of a neural network.
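Here is a minimal plain-Python sketch of a single neuron: a weighted sum of the inputs plus a bias, passed through an activation function. The input values, weights, bias, and the choice of ReLU as the activation are illustrative assumptions. They are not taken from any real model.

```python
def relu(z: float) -> float:
    """ReLU activation: 0 for negative inputs, the input itself otherwise."""
    return max(0.0, z)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One neuron: weighted sum of the inputs plus a bias, then the activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(weighted_sum)


# Illustrative values: three inputs, three weights, one bias.
output = neuron([0.5, -1.0, 2.0], [0.4, 0.3, 0.1], bias=0.1)
print(output)
```

Here the weighted sum is 0.2 - 0.3 + 0.2 = 0.1. The bias raises it to 0.2, and because that value is positive, ReLU passes it through unchanged (up to floating-point rounding).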

📥 Input Layer

This is where data enters the network. For example, in image recognition, the input layer might contain values for each pixel of the image.
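As an illustration, a tiny 3x3 grayscale image can be flattened row by row into nine input values, one per pixel. The image is hypothetical. Scaling intensities from the range 0 to 255 down to 0 to 1 is a common preprocessing choice that this sketch assumes, not something the network requires.

```python
# A hypothetical 3x3 grayscale image: each number is a pixel intensity (0 = black, 255 = white).
image = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 128],
]

# Flatten row by row so each pixel becomes one value in the input layer,
# scaling intensities from 0..255 down to 0..1.
input_layer = [pixel / 255 for row in image for pixel in row]

print(len(input_layer))  # 9: one input value per pixel
print(input_layer[:3])
```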

🧠 Hidden Layers

Hidden layers perform the actual computation. A simple neural network might have just one hidden layer, while deep learning models can have dozens or even hundreds of layers. Each layer refines the data, learning progressively more complex features.

📤 Output Layer

The output layer produces the final prediction or classification. In a binary classification task, it might be a single neuron that outputs a value between 0 and 1, which is interpreted as the probability of the positive class.
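Here is a minimal sketch of such an output neuron. The sigmoid squashes the neuron's weighted sum into a value between 0 and 1, and a threshold turns that value into a class label. The 0.5 threshold is a common convention assumed here, and the incoming weighted sums are made-up numbers.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def classify(z: float, threshold: float = 0.5) -> tuple[float, int]:
    """Return (probability of class 1, predicted label) for an output neuron's weighted sum z."""
    probability = sigmoid(z)
    label = 1 if probability >= threshold else 0
    return probability, label


# Made-up weighted sums arriving at the output neuron.
for z in (-3.0, 0.0, 2.5):
    p, label = classify(z)
    print(f"z={z:+.1f} -> p={p:.3f} -> class {label}")
```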

⚖️ Weights and Biases

Every connection between neurons has a weight, which determines how strongly that connection influences the next neuron. Each neuron also has a bias, a constant added to its weighted sum. The bias shifts the activation function and makes the model more flexible. These parameters are adjusted during training to minimise errors.
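Every connection carries one weight and every non-input neuron carries one bias, so the number of trainable parameters follows directly from the layer sizes. This sketch assumes fully connected (dense) layers. The layer sizes are illustrative, and the first example uses the same 10-8-1 shape as the TensorFlow network described later in this article.

```python
def count_parameters(layer_sizes: list[int]) -> tuple[int, int]:
    """Count weights and biases in a fully connected network.

    layer_sizes[0] is the input layer; each later entry is the number of
    neurons in that layer. Every neuron is connected to every neuron in the
    previous layer (one weight per connection) and has one bias.
    """
    weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights, biases


# 10 inputs -> 8 hidden neurons -> 1 output neuron
w, b = count_parameters([10, 8, 1])
print(w, b, w + b)  # 88 9 97
```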

⚙️ How Neural Networks Work

Neural networks learn in two main phases: forward propagation and backpropagation.

🔄 Forward Propagation

Data flows from the input layer to the output layer. Each neuron computes a weighted sum of its inputs plus its bias, applies an activation function (such as ReLU, sigmoid, or tanh), and passes the result to the next layer.

For example, in an image classifier:

- The input layer receives pixel values.
- Hidden layers identify patterns such as edges or shapes.
- The output layer predicts the category (e.g., cat, dog, or car).
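The three steps above can be traced in a short plain-Python sketch. Four pixel values pass through a hidden layer of two ReLU neurons and then an output layer with one neuron per category. All weights and biases are hand-picked, hypothetical numbers. The sketch also assumes softmax to turn the output scores into probabilities; softmax is a common choice for multi-class outputs, but this article does not otherwise cover it.

```python
import math


def relu(z):
    """ReLU activation: 0 for negative inputs, the input itself otherwise."""
    return max(0.0, z)


def identity(z):
    """No activation: keep the raw score."""
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron sums inputs times its weights, adds its bias, then activates."""
    return [
        activation(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical, hand-picked parameters: 4 inputs -> 2 hidden neurons -> 3 classes.
hidden_w = [[0.5, -0.2, 0.1, 0.4], [-0.3, 0.8, 0.2, -0.1]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -0.5], [-0.4, 0.9], [0.2, 0.2]]
output_b = [0.0, 0.0, 0.1]
classes = ["cat", "dog", "car"]

pixels = [0.9, 0.1, 0.4, 0.7]                         # input layer: pixel values
hidden = dense(pixels, hidden_w, hidden_b, relu)      # hidden layer
scores = dense(hidden, output_w, output_b, identity)  # output layer: one score per class
probs = softmax(scores)

prediction = classes[probs.index(max(probs))]
print(prediction, [round(p, 3) for p in probs])
```

With these particular numbers, the first hidden neuron outputs 0.75. The second neuron's weighted sum is negative, so ReLU outputs 0. The "cat" score (0.75) is the largest of the three, so the network predicts "cat".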

🔁 Backpropagation

After a prediction is made, the network calculates the error between its prediction and the true value using a loss function (e.g., mean squared error or cross-entropy). Then:

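- The error is propagated backward through the network. The chain rule is used to work out how much each weight and bias contributed to it (the gradient of the loss).
- Weights and biases are updated using gradient descent: each one is nudged a small step in the direction that reduces the loss.
- This process repeats over many iterations, allowing the model to learn.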
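The loop above can be sketched end to end in plain Python. The network is tiny: two inputs, four tanh hidden neurons, and one sigmoid output. It is trained on the XOR problem with binary cross-entropy loss and full-batch gradient descent. The dataset, network size, learning rate, epoch count, and random initialisation are all illustrative assumptions. Real projects typically let a library such as TensorFlow compute the gradients automatically instead of writing them out by hand.

```python
import math
import random


def sigmoid(z):
    """Squash a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy dataset: XOR, which a single neuron cannot learn (not linearly separable).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

rng = random.Random(0)
n_in, n_hidden = 2, 4
learning_rate = 0.5
w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]  # input -> hidden weights
b1 = [0.0] * n_hidden                                                        # hidden biases
w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]                           # hidden -> output weights
b2 = 0.0                                                                     # output bias


def forward(x):
    """Forward propagation: return the hidden activations and the output probability."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    p = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, p


def mean_loss():
    """Binary cross-entropy averaged over the dataset."""
    total = 0.0
    for x, y in data:
        _, p = forward(x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


initial_loss = mean_loss()
for _ in range(2000):
    # Zeroed gradient accumulators for one full pass over the data.
    g_w1 = [[0.0] * n_in for _ in range(n_hidden)]
    g_b1 = [0.0] * n_hidden
    g_w2 = [0.0] * n_hidden
    g_b2 = 0.0
    for x, y in data:
        h, p = forward(x)
        # For sigmoid + cross-entropy, dLoss/d(output sum) simplifies to (p - y).
        d_out = p - y
        g_b2 += d_out
        for j in range(n_hidden):
            g_w2[j] += d_out * h[j]
            # Chain rule: pass the error back through w2[j] and tanh' = 1 - tanh^2.
            d_hidden = d_out * w2[j] * (1 - h[j] ** 2)
            g_b1[j] += d_hidden
            for i in range(n_in):
                g_w1[j][i] += d_hidden * x[i]
    # Gradient descent: step each parameter against its average gradient.
    n = len(data)
    b2 -= learning_rate * g_b2 / n
    for j in range(n_hidden):
        w2[j] -= learning_rate * g_w2[j] / n
        b1[j] -= learning_rate * g_b1[j] / n
        for i in range(n_in):
            w1[j][i] -= learning_rate * g_w1[j][i] / n

final_loss = mean_loss()
print(f"mean loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

The backpropagation step is the chain rule at work. The output error (p - y) is multiplied by each output weight and by the tanh derivative, which gives each hidden neuron's share of the error. How close the loss gets to zero within 2000 epochs depends on the random starting weights. Compare the two printed values rather than expecting a particular number.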

⚡ Activation Functions

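Activation functions add non-linearity to the model, enabling it to solve complex problems. Without them, any stack of layers would collapse into a single linear transformation, no matter how many layers it had.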

- ReLU (Rectified Linear Unit): Outputs 0 for negative inputs and the input itself for positive inputs. It is fast and effective, and it is the usual default in hidden layers.
- Sigmoid: Maps inputs to values between 0 and 1, so its output can be read as a probability. It is commonly used in the output layer for binary classification.
- Tanh (Hyperbolic Tangent): Similar to sigmoid but ranges from -1 to 1. Because its output is centred on zero, it is often used in hidden layers, especially in recurrent networks.
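The three functions take only a few lines of plain Python. The sample inputs are arbitrary. The final check shows why tanh is described as similar to sigmoid: it is exactly a sigmoid stretched to the range -1 to 1, since tanh(z) = 2·sigmoid(2z) - 1.

```python
import math


def relu(z: float) -> float:
    """0 for negative inputs, the input itself for positive inputs."""
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z: float) -> float:
    """Maps any real number into the range (-1, 1), centred on 0."""
    return math.tanh(z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")

# tanh is a stretched and shifted sigmoid: tanh(z) = 2 * sigmoid(2z) - 1.
z = 0.7
print(abs(tanh(z) - (2 * sigmoid(2 * z) - 1)) < 1e-12)
```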

🧪 Building a Simple Neural Network in Python

Let’s build a simple neural network using TensorFlow, one of the most popular deep learning libraries.

What This Code Does:

- Input: 10 features.
- Hidden layer: 8 neurons using ReLU.
- Output layer: 1 neuron using sigmoid (a good fit for spam / not spam classification).
- Loss function: binary_crossentropy, ideal for binary classification.
- Optimizer: adam, an efficient, adaptive variant of gradient descent.
- Training: done by calling fit() on your data.

This is a great starting point to explore deep learning projects.

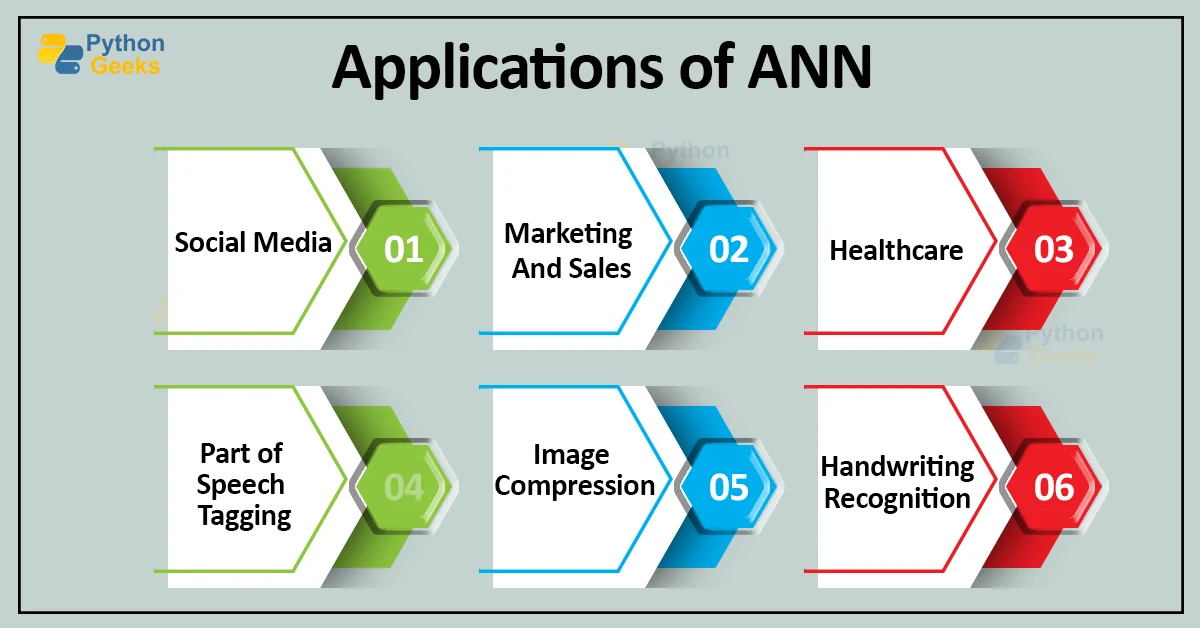
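The code is shown above only as an image, so here is one way to write a model matching the description, using the Keras API bundled with TensorFlow 2.x. The synthetic data (200 random samples with random 0/1 labels), the number of epochs, and the batch size are hypothetical placeholders and do not come from the original. Because the labels are random, the model has nothing real to learn: the point is the structure, not the accuracy.

```python
import numpy as np
import tensorflow as tf

# Hypothetical synthetic data: 200 samples with 10 features and random 0/1 labels.
rng = np.random.default_rng(seed=0)
X = rng.random((200, 10)).astype("float32")
y = rng.integers(0, 2, size=(200, 1)).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(10,)),                     # input: 10 features
    tf.keras.layers.Dense(8, activation="relu"),     # hidden layer: 8 ReLU neurons
    tf.keras.layers.Dense(1, activation="sigmoid"),  # output: probability of class 1 (e.g. spam)
])

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Train on the placeholder data; replace X and y with a real dataset.
history = model.fit(X, y, epochs=5, batch_size=32, verbose=0)

probs = model.predict(X[:3], verbose=0)  # shape (3, 1), one probability per sample
print(probs)
```

This network has 10 × 8 + 8 = 88 parameters in the hidden layer and 8 + 1 = 9 in the output layer, 97 in total, which model.summary() will also report.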

🚀 Applications of Neural Networks

Neural networks power many modern AI systems. Here are key areas where they shine:

🖼️ Image Recognition

Used in facial recognition, self-driving cars, and medical diagnostics. Convolutional Neural Networks (CNNs) are especially effective here.

🗣️ Natural Language Processing (NLP)

Neural networks are used in language translation, sentiment analysis, summarisation and chatbots. Sequence models such as LSTMs, and more recently Transformers, are widely used for language-based tasks.

🎤 Speech Recognition

Virtual assistants like Siri and Alexa rely on deep neural networks to understand and process speech input.

🧠 Other Applications

- Fraud detection
- Game playing (e.g., AlphaGo)
- Stock market prediction
- Personalised recommendations

🔮 Future of Neural Networks

Neural networks are evolving quickly, and the future holds even more exciting possibilities:

- Explainable AI: Understanding how a neural network makes decisions.
- Neuromorphic Computing: Mimicking real brain structure in hardware.
- Real-time Personal AI Assistants: Highly responsive and personalised.

As more computing power becomes available and datasets grow, neural networks are likely to keep outperforming traditional models in a widening range of domains.

✅ Conclusion

Neural networks are at the heart of artificial intelligence. They allow computers to learn from data, recognise patterns, and make decisions in ways that were once thought to be uniquely human. From image classification to voice recognition and even language understanding, neural networks are transforming industries and opening up new possibilities.
